[3D Reconstruction for End Users] AI-Driven Visual Effect Studio

A 3D scene reconstruction system for end-user videos.

“Do you have a moment you want to remember forever? Just take a video. Our AI-powered solution brings that moment into a virtual 3D world, permanently.”

Building on our experience in the NGR-CO3D challenge, we started developing an automatic, AI-driven 3D scene reconstruction system for end users. Here we describe the resulting prototype.

Inputs

Just take a video and upload it to us, for example:

Outputs

Our solution automatically processes end users' videos and then lets them browse their scenes in 3D:

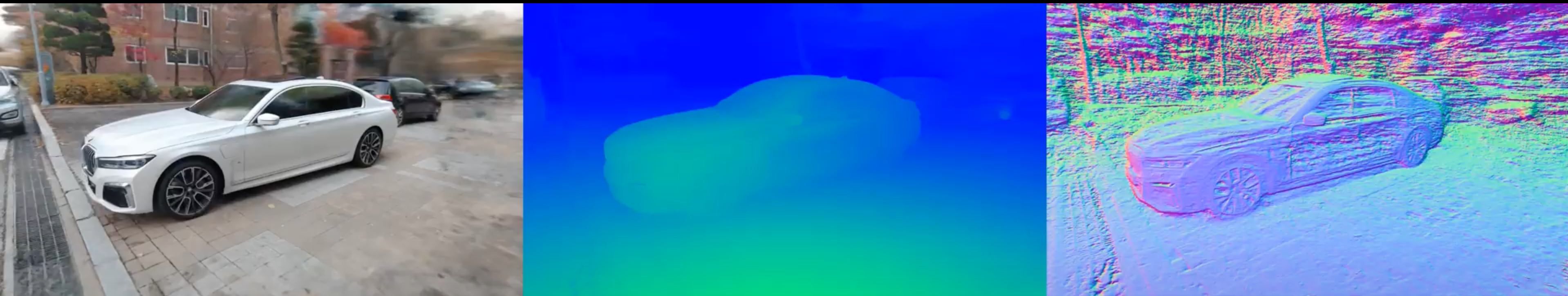

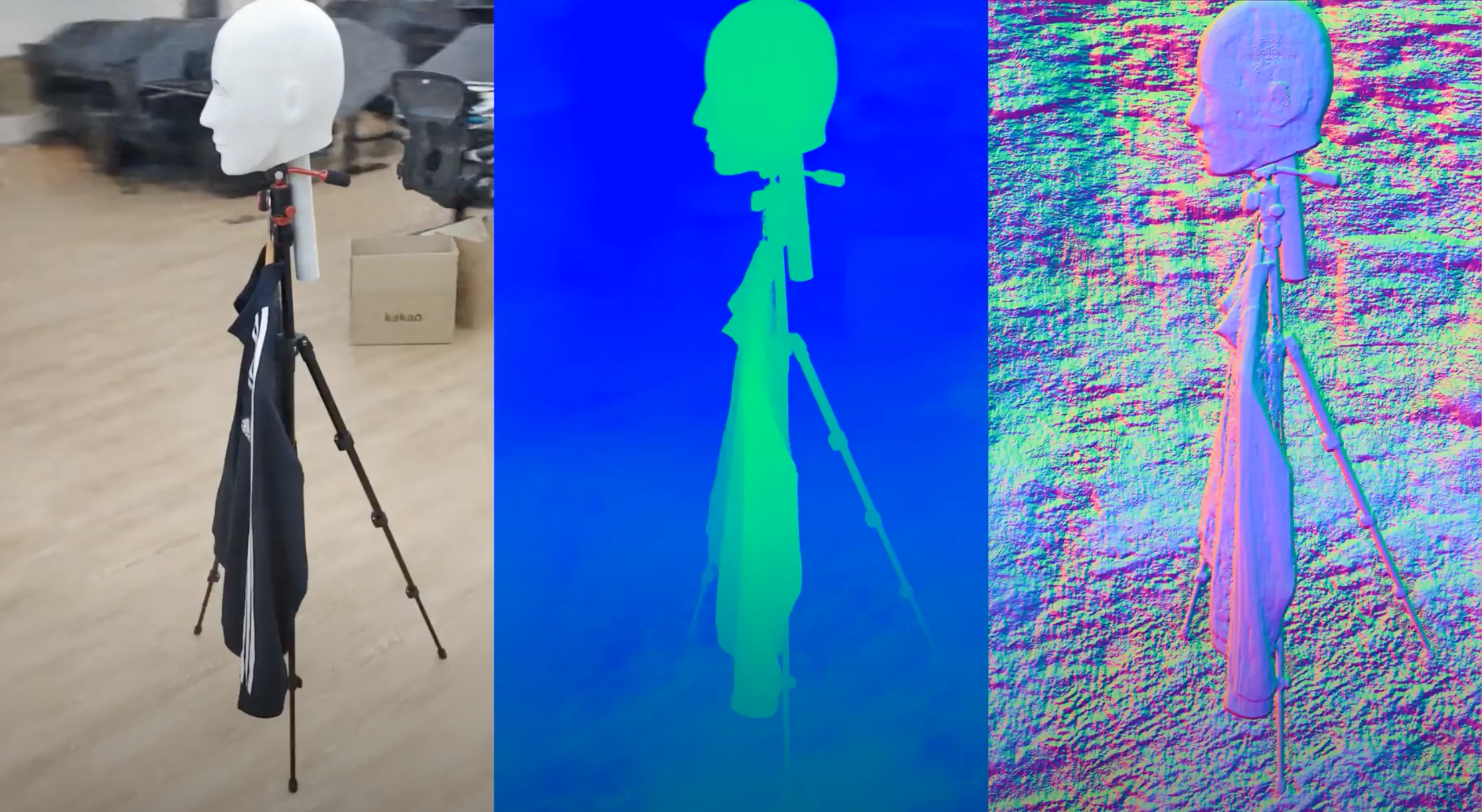

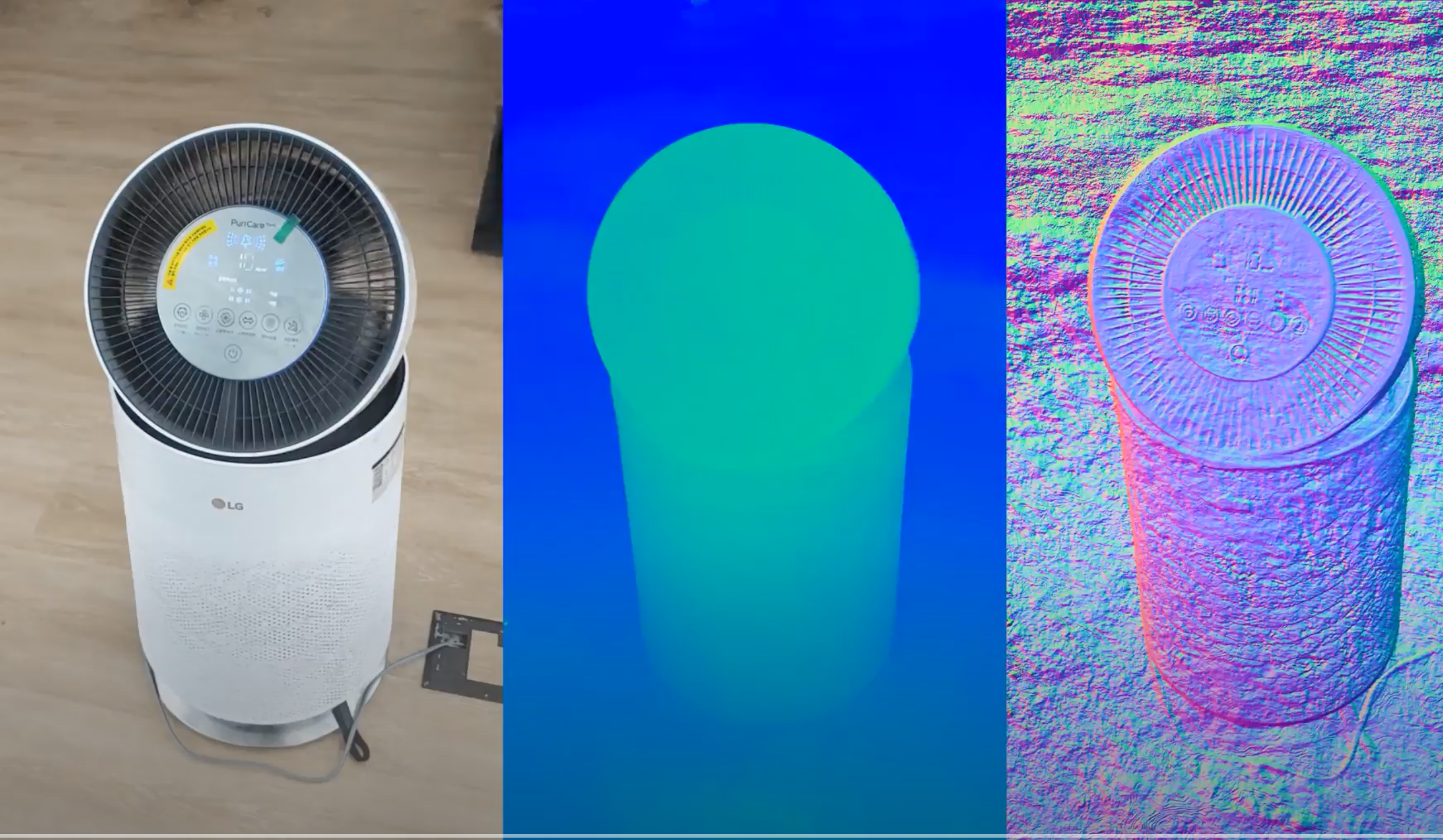

Order: novel-view RGB, depth, and surface normal

One’s favorite car (best viewed in full-screen mode)

Clothes

Clothes

Object

Object

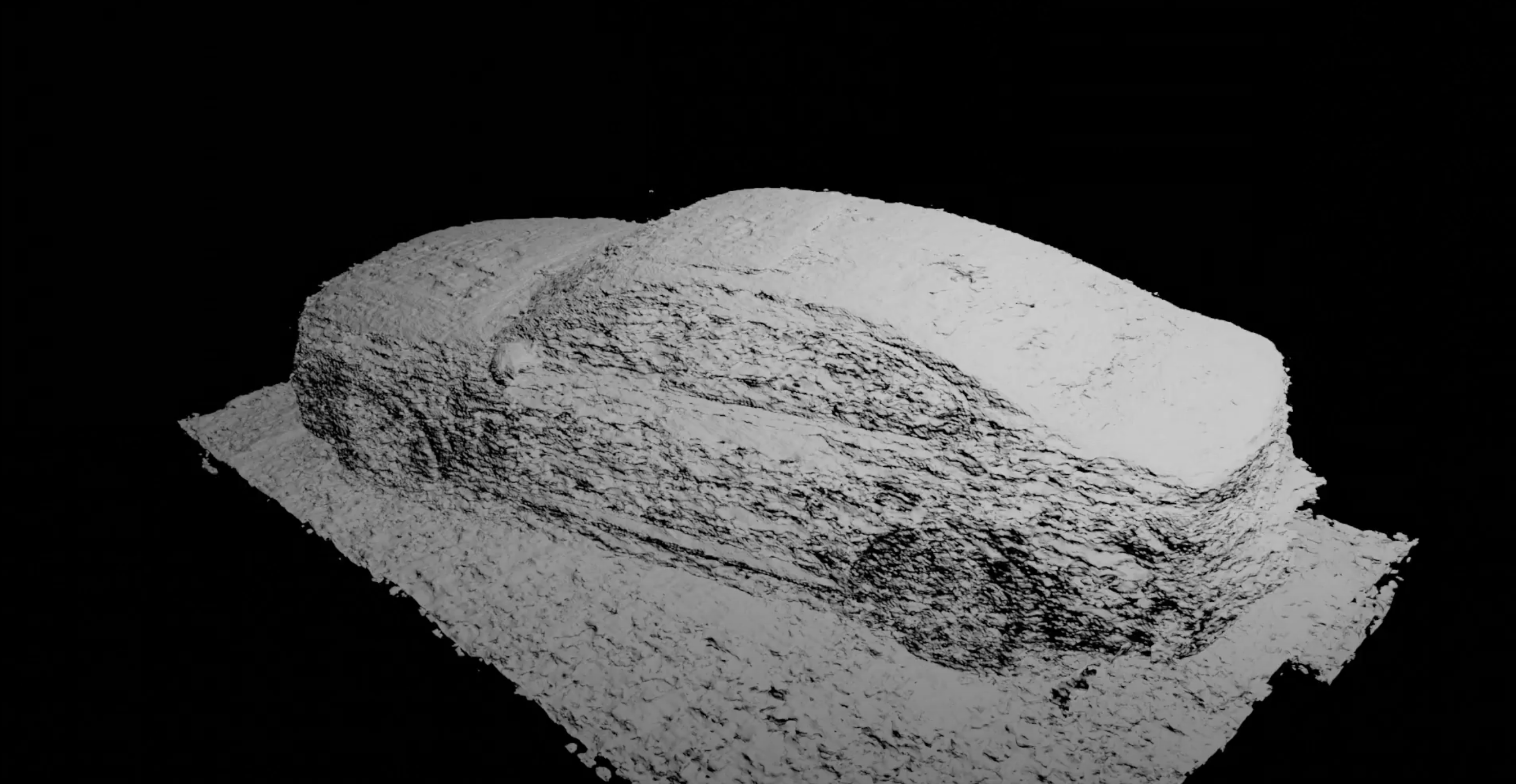

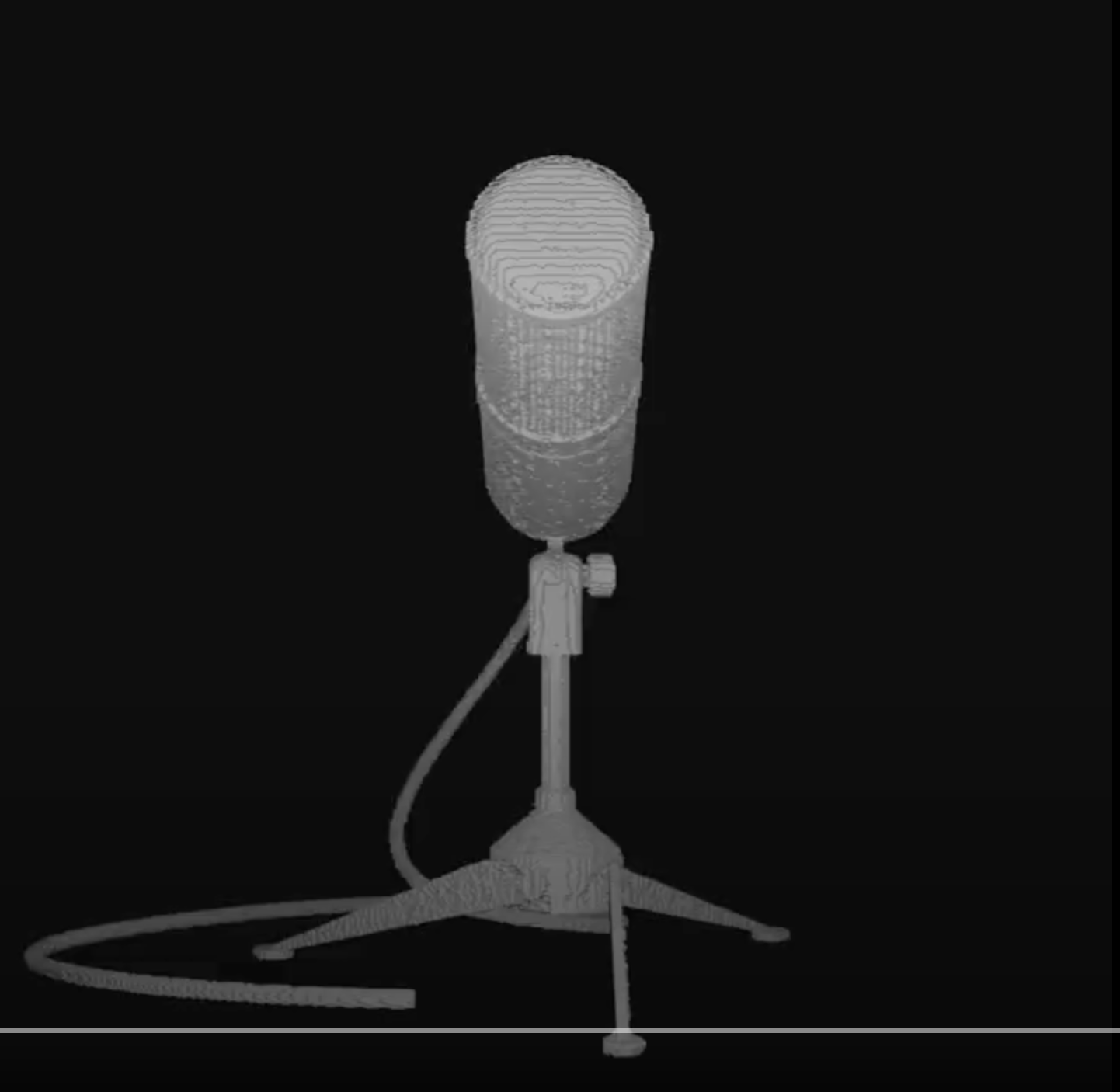
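The surface-normal renderings above come from the implicit model, but the prototype's exact method for computing them is not described. A common approach, sketched here under that assumption, is to take the normalized gradient of the implicit field, estimated with central differences. The helper name `normals_from_field` and its `outward_is_increasing` flag are hypothetical.

```python
import numpy as np


def normals_from_field(field_fn, points, eps=1e-4, outward_is_increasing=True):
    """Hypothetical helper: unit normals from the gradient of an implicit field.

    field_fn maps an (N, 3) array of points to N scalar values. The gradient is
    estimated with central differences along x, y, and z. For a signed distance
    field the gradient already points outward; for a NeRF-style density field
    (density grows toward the inside) pass outward_is_increasing=False to flip it.
    """
    points = np.asarray(points, dtype=float)
    grad = np.zeros_like(points)
    for k in range(3):
        offset = np.zeros(3)
        offset[k] = eps
        grad[:, k] = (field_fn(points + offset) - field_fn(points - offset)) / (2 * eps)
    if not outward_is_increasing:
        grad = -grad
    return grad / np.linalg.norm(grad, axis=-1, keepdims=True)
```

Frameworks with automatic differentiation usually compute this gradient analytically instead; a per-pixel normal map can then be composited from per-sample normals with the same volume-rendering weights used for color.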

After training our model, we can extract a 3D mesh with the marching cubes algorithm:

(To render these extracted meshes, we used Blender’s EEVEE rendering engine.)


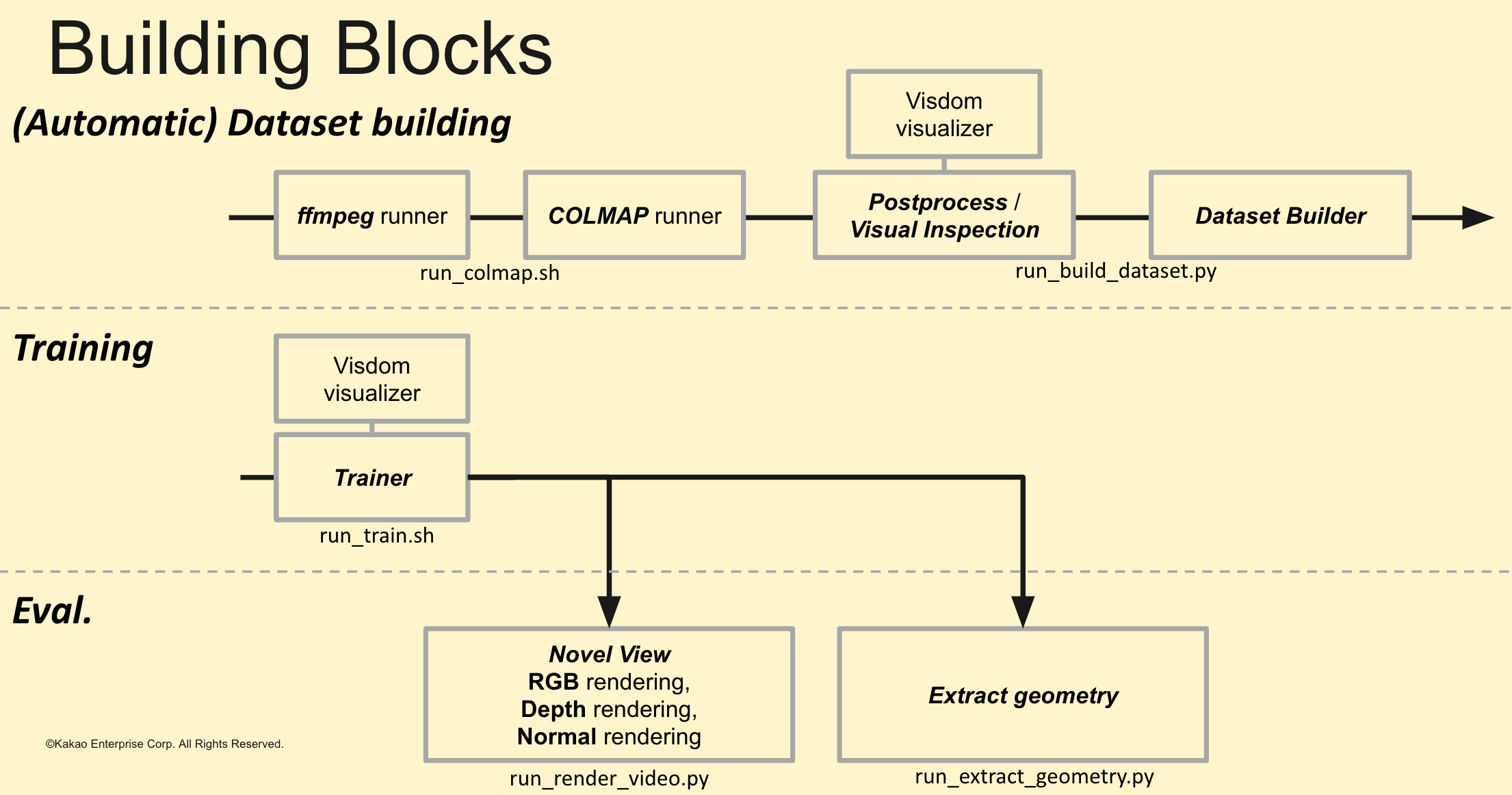
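Marching cubes operates on a regular grid of field values: it finds grid edges where the field crosses an iso-level, places a vertex on each such edge by linear interpolation, and then joins those vertices into triangles using a 256-case lookup table. The sketch below covers only the grid sampling and vertex-placement steps, on a toy sphere signed distance field that stands in (as an assumption) for the trained network. The helper names are hypothetical; in practice the full algorithm, including triangulation, is usually delegated to a library such as scikit-image's `measure.marching_cubes`.

```python
import numpy as np


def sample_grid(field_fn, resolution, bound):
    """Hypothetical helper: query an implicit field on a resolution^3 grid over [-bound, bound]^3."""
    axis = np.linspace(-bound, bound, resolution)
    xs, ys, zs = np.meshgrid(axis, axis, axis, indexing="ij")
    points = np.stack([xs, ys, zs], axis=-1)  # (R, R, R, 3)
    values = field_fn(points.reshape(-1, 3)).reshape(resolution, resolution, resolution)
    return axis, values


def edge_crossings(axis, values, level=0.0):
    """Vertex-placement step of marching cubes (triangulation omitted).

    For every grid edge whose endpoints lie on opposite sides of `level`,
    linearly interpolate the point where the field crosses it.
    """
    grid = np.stack(np.meshgrid(axis, axis, axis, indexing="ij"), axis=-1)
    vertices = []
    for dim in range(3):  # edges along x, then y, then z
        lo = [slice(None)] * 3
        hi = [slice(None)] * 3
        lo[dim], hi[dim] = slice(None, -1), slice(1, None)
        v0 = values[tuple(lo)] - level
        v1 = values[tuple(hi)] - level
        crossing = (v0 < 0) != (v1 < 0)  # sign change along this edge
        t = v0[crossing] / (v0[crossing] - v1[crossing])  # fraction of the way from lo to hi
        p0 = grid[tuple(lo)][crossing]
        p1 = grid[tuple(hi)][crossing]
        vertices.append(p0 + t[:, None] * (p1 - p0))
    return np.concatenate(vertices, axis=0)
```

For a NeRF-style density field, `level` would be a chosen density threshold instead of the zero level set of a signed distance field.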

System Building Blocks

Our system consists of three major stages: 1) Automatic Dataset Building, 2) Training, and 3) Evaluation.

- Automatic Dataset Building

– Our solution splits an input video into N frames and then runs an off-the-shelf Structure-from-Motion (SfM) package (COLMAP in our case) to estimate the camera parameters, i.e., the intrinsics (focal length, principal point, and distortion) and the camera-to-world extrinsics (rotation and translation).

– Auxiliary information, e.g., semantic segmentation masks and face-parsing masks, is also extracted with pre-trained vision networks.

- Training

– Our implicit neural network is trained on the dataset built in the previous stage.

- Evaluation

– Given the trained implicit renderer, the ray sampler, and the training dataset, we compose a virtual camera trajectory and synthesize novel views along it offline.
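The dataset-building stage can be approximated with standard command-line tools. The sketch below is an assumption, not the prototype's actual script: it uses ffmpeg to sample frames at a fixed rate and COLMAP's `feature_extractor`, `exhaustive_matcher`, and `mapper` commands for SfM. The helper names, directory layout, and `fps` value are hypothetical. The `OPENCV` camera model is chosen because it covers the focal length, principal point, and distortion terms listed above, and `single_camera` reflects the assumption that every frame comes from the same phone camera.

```python
from pathlib import Path
import subprocess


def build_reconstruction_commands(video, workdir, fps=2):
    """Hypothetical helper: argv lists for frame extraction plus COLMAP SfM."""
    work = Path(workdir)
    images = work / "images"
    database = work / "database.db"
    sparse = work / "sparse"
    return [
        # Split the video into frames at a fixed sampling rate.
        ["ffmpeg", "-i", str(video), "-vf", f"fps={fps}", str(images / "%04d.png")],
        # Detect features; one shared camera with the OPENCV model
        # (fx, fy, cx, cy plus radial and tangential distortion).
        ["colmap", "feature_extractor",
         "--database_path", str(database),
         "--image_path", str(images),
         "--ImageReader.single_camera", "1",
         "--ImageReader.camera_model", "OPENCV"],
        # Match features between all image pairs.
        ["colmap", "exhaustive_matcher", "--database_path", str(database)],
        # Incremental SfM: estimates intrinsics, poses, and a sparse point cloud.
        ["colmap", "mapper",
         "--database_path", str(database),
         "--image_path", str(images),
         "--output_path", str(sparse)],
    ]


def run_commands(commands, workdir):
    """Hypothetical runner: create the output folders, then execute each step in order."""
    (Path(workdir) / "images").mkdir(parents=True, exist_ok=True)
    (Path(workdir) / "sparse").mkdir(parents=True, exist_ok=True)
    for cmd in commands:
        subprocess.run(cmd, check=True)
```

For long videos, COLMAP's `sequential_matcher`, which matches temporally nearby frames, is usually much cheaper than exhaustive matching.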
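COLMAP stores each registered image's pose as a world-to-camera quaternion `(qw, qx, qy, qz)` and translation `t`, so that a world point maps to camera coordinates as x_cam = R x_world + t. Renderers usually need the inverse, camera-to-world matrix. A minimal sketch of that conversion; the function names are hypothetical:

```python
import numpy as np


def qvec_to_rotmat(qvec):
    """Unit quaternion in COLMAP's (w, x, y, z) order to a 3x3 rotation matrix."""
    w, x, y, z = np.asarray(qvec, dtype=float) / np.linalg.norm(qvec)
    return np.array([
        [1 - 2 * (y * y + z * z), 2 * (x * y - w * z), 2 * (x * z + w * y)],
        [2 * (x * y + w * z), 1 - 2 * (x * x + z * z), 2 * (y * z - w * x)],
        [2 * (x * z - w * y), 2 * (y * z + w * x), 1 - 2 * (x * x + y * y)],
    ])


def colmap_to_camera_to_world(qvec, tvec):
    """Invert COLMAP's world-to-camera pose into a 4x4 camera-to-world matrix."""
    R = qvec_to_rotmat(qvec)
    t = np.asarray(tvec, dtype=float)
    c2w = np.eye(4)
    c2w[:3, :3] = R.T      # camera axes expressed in world coordinates
    c2w[:3, 3] = -R.T @ t  # camera center in world coordinates
    return c2w
```

Many NeRF codebases additionally flip the y and z axes to move from COLMAP's camera convention (x right, y down, z forward) to an OpenGL-style convention (y up, camera looking along -z); whether this prototype does so is not stated.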
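The ray sampler needs one ray per pixel. Assuming undistorted images (for example, after running COLMAP's `image_undistorter`) and a pinhole model with focal lengths `fx`, `fy` and principal point `cx`, `cy`, rays can be generated from the intrinsics and a camera-to-world matrix as sketched below. The function name is hypothetical, and the camera axes follow COLMAP's x-right, y-down, z-forward convention.

```python
import numpy as np


def generate_rays(fx, fy, cx, cy, width, height, c2w):
    """Hypothetical helper: one ray through each pixel center of a pinhole camera.

    Returns (origins, directions), each of shape (height, width, 3), in world coordinates.
    """
    u, v = np.meshgrid(np.arange(width) + 0.5, np.arange(height) + 0.5)  # (H, W) pixel centers
    dirs_cam = np.stack([(u - cx) / fx, (v - cy) / fy, np.ones_like(u)], axis=-1)
    dirs_world = dirs_cam @ c2w[:3, :3].T  # rotate camera-frame directions into the world frame
    dirs_world /= np.linalg.norm(dirs_world, axis=-1, keepdims=True)
    origins = np.broadcast_to(c2w[:3, 3], dirs_world.shape)  # all rays start at the camera center
    return origins, dirs_world
```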
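The prototype's network architecture is not described, so the following is an assumption: it sketches the standard NeRF ray sampler and volume renderer. Stratified sampling draws one random depth inside each of `n_samples` equal-width bins between `near` and `far`. Volume rendering then turns each sample's density sigma_i into an opacity alpha_i = 1 - exp(-sigma_i * delta_i), where delta_i is the distance to the next sample, weights it by the transmittance T_i = prod_{j<i} (1 - alpha_j), and sums the weighted colors. Summing the weighted sample depths instead gives an expected-depth value, one way to produce depth renderings like those in the gallery. The function names are hypothetical.

```python
import numpy as np


def stratified_samples(near, far, n_samples, rng):
    """NeRF-style stratified sampling: one uniform draw inside each of n equal bins."""
    edges = np.linspace(near, far, n_samples + 1)
    lower, upper = edges[:-1], edges[1:]
    return lower + (upper - lower) * rng.random(n_samples)


def volume_render(sigmas, colors, t_vals, far):
    """Composite per-sample densities (N,) and RGB colors (N, 3) along one ray.

    Returns (rgb, depth, weights) using the emission-absorption quadrature from NeRF.
    """
    deltas = np.diff(np.append(t_vals, far))  # segment lengths; the last one ends at `far`
    alphas = 1.0 - np.exp(-sigmas * deltas)   # opacity of each segment
    # Transmittance: probability that the ray reaches sample i without being absorbed.
    trans = np.cumprod(np.append(1.0, 1.0 - alphas[:-1]))
    weights = trans * alphas
    rgb = weights @ colors            # expected color
    depth = np.sum(weights * t_vals)  # expected termination distance
    return rgb, depth, weights
```

The original NeRF code gives the last sample an effectively infinite segment length; this sketch ends it at `far` instead. During training, the rendered color would be compared with the observed pixel color, typically with a mean-squared-error loss.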
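For the evaluation stage, a virtual camera trajectory can be as simple as a circular orbit around the scene. The sketch below is one hypothetical choice, not the prototype's actual path: it builds look-at camera-to-world matrices in COLMAP's x-right, y-down, z-forward camera convention and assumes the world z axis points "up", which does not hold for an arbitrary SfM reconstruction unless the scene is re-oriented first.

```python
import numpy as np


def look_at_c2w(eye, target, world_up=(0.0, 0.0, 1.0)):
    """Hypothetical helper: camera-to-world matrix looking from `eye` toward `target`.

    Columns are the camera's right (x), down (y), and forward (z) axes, then its center.
    """
    eye = np.asarray(eye, dtype=float)
    forward = np.asarray(target, dtype=float) - eye
    forward /= np.linalg.norm(forward)
    right = np.cross(forward, world_up)
    right /= np.linalg.norm(right)
    down = np.cross(forward, right)  # completes a right-handed frame: right x down = forward
    c2w = np.eye(4)
    c2w[:3, 0], c2w[:3, 1], c2w[:3, 2], c2w[:3, 3] = right, down, forward, eye
    return c2w


def orbit_trajectory(center, radius, height, n_frames):
    """Hypothetical novel-view path: n_frames poses on a horizontal circle around `center`."""
    center = np.asarray(center, dtype=float)
    poses = []
    for theta in np.linspace(0.0, 2.0 * np.pi, n_frames, endpoint=False):
        eye = center + np.array([radius * np.cos(theta), radius * np.sin(theta), height])
        poses.append(look_at_c2w(eye, center))
    return np.stack(poses)
```

Each pose, combined with the training intrinsics, defines the rays for one frame of the novel-view video. `look_at_c2w` breaks down when the viewing direction is parallel to `world_up`, e.g., for a camera directly above the center.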

Keywords:

Neural Rendering, Neural Radiance Field (NeRF), Visual Effect Studio, 3D Reconstruction